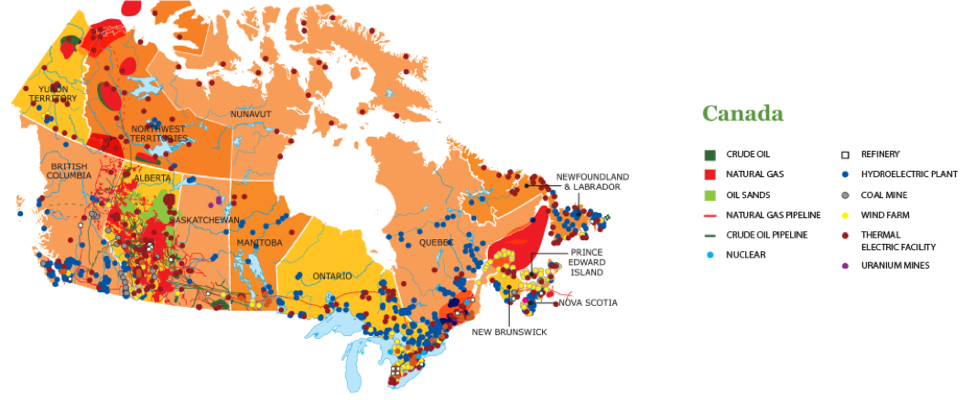

CANADIAN ENERGY AT A GLANCE

Canada has many energy sources and infrastructures (www.centreforenergy.com)

We examine and visualize Canadian conversations about energy to support broader energy policy discussions in Canada and beyond. For each energy source, we developed keyword lists to use as search terms, and used them to build text corpora covering incumbent and nonincumbent energy sources, technologies, projects, companies, regulators, communities, environmental groups, and other stakeholders. From these corpora we can examine topics and clusters, sentiment, geography, time series, networks, and the values that speakers express.

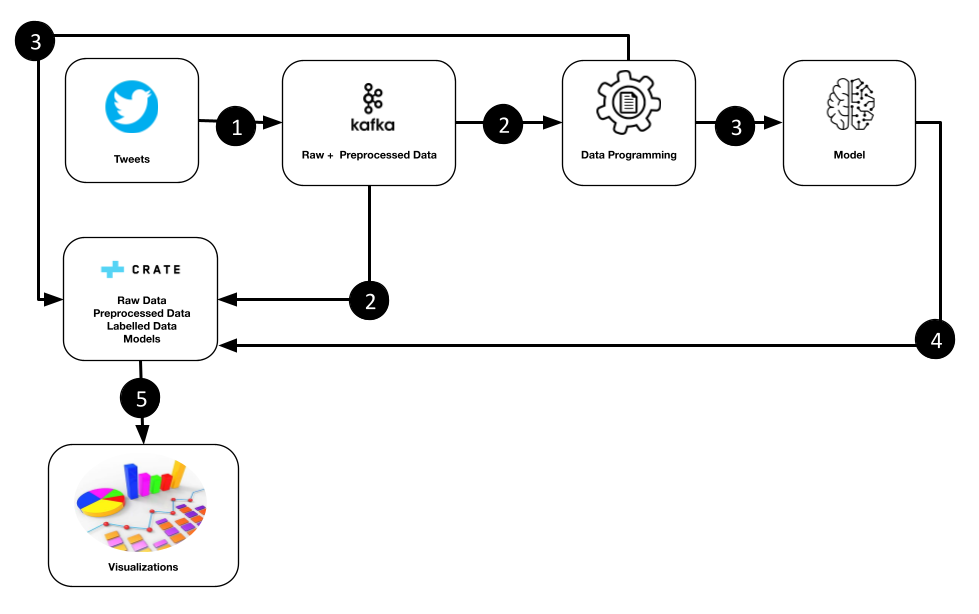

At this point, we are analyzing Twitter data. We gather tweets from Canadian handles and/or about Canadian energy. We take this ‘raw’ data, ‘clean’ the metadata (time, geography, keywords, etc.), and label and model it using personal values, emotion, and other dictionaries. The results are then used to visualize conversations over time and across geographies (Superset). All of this is stored in a database (CrateDB). More information on our methods, dictionaries, etc. is available on each of the associated pages.

- Tweets are transferred to a Kafka topic in raw form.

- Raw tweets are stored in CrateDB. Tweets in the topic are cleaned and preprocessed, then passed to the data programming step.

- Preprocessed tweets are labelled and stored in CrateDB. Training and testing data extracted from CrateDB are used for model creation.

- Model experiments are evaluated to pick the best model, and a reference to that model is stored in CrateDB.

- Preprocessed tweets are filtered by dictionary (personal values, humour, and sentiment) and shown in visualizations that surface insights about the data.
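
The cleaning and preprocessing step above can be illustrated with a minimal Python sketch. The record fields (`id`, `text`, `created_at`, `place`) and the specific cleaning rules are assumptions made for illustration; the source does not specify the actual schema or rules used in the pipeline.

```python
import re
from datetime import datetime, timezone

# Hypothetical cleaning rules; the real pipeline's rules are not specified.
URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@\w+")
WHITESPACE_RE = re.compile(r"\s+")


def preprocess_tweet(raw: dict) -> dict:
    """Return a cleaned copy of one raw tweet record (assumed schema)."""
    text = URL_RE.sub(" ", raw["text"])        # drop links
    text = MENTION_RE.sub(" ", text)           # drop @mentions
    text = text.replace("#", "")               # keep hashtag words, drop '#'
    text = WHITESPACE_RE.sub(" ", text).strip().lower()

    # Normalize the timestamp to UTC so time series line up.
    created = datetime.fromisoformat(raw["created_at"]).astimezone(timezone.utc)

    return {
        "id": raw["id"],
        "text": text,
        "created_at": created.isoformat(),
        "place": raw.get("place"),             # may be missing
    }


raw_tweet = {
    "id": 1,
    "text": "Check this @user https://t.co/abc #Pipeline NEWS  ",
    "created_at": "2021-03-01T12:30:00-07:00",
}
clean_tweet = preprocess_tweet(raw_tweet)
```

In a setup like the one described, a function of this kind would sit between the raw Kafka topic and the preprocessed table, so the raw record stays untouched and the cleaned copy is what later steps read.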

General description:

MAPPING AND MEASURING BASIC HUMAN VALUES

Values are typically defined as abstract goals or guiding principles in people’s lives (Maio, 2016; Schwartz, 1992): “concepts or beliefs … pertain to desirable end states or behaviors, transcend specific situations, guide selection or evaluation of behavior and events, and are ordered by relative importance.”

Modeling values as a two‐dimensional structure (social vs. personal, anxiety-free vs. anxiety-avoidance) has been replicated in many cross‐sectional and experimental studies conducted in over 80 countries (Bilsky et al., 2011; Schwartz et al., 2012).

Values predict prejudice (Wolf et al., 2019), protest action (Mayton & Furnham, 1994), environmental behaviour (Hurst et al., 2013), and collective responses such as those to COVID-19 (Wolf et al., 2020).

Each value has an associated dictionary, which can be used to measure or model the values expressed in a text, as well as combinations of values and the similarities and differences between them.

Self-Direction

Derived from organismic needs for control and mastery and interactional requirements of autonomy and independence. The motivational goal is independent thought and action. Associations: creativity, freedom, choosing own goals, curious, independent, self-respect.

Stimulation

Derived from the presumed organismic need for variety and stimulation in order to maintain an optimal level of activation. The motivational goal is excitement, novelty, and challenge in life. Associations: an exciting life, a varied life, daring.

Hedonism

Derived from organismic needs and the pleasure associated with satisfying them. The motivational goal is pleasure or sensuous gratification for oneself. Associations: pleasure, enjoying life.

Achievement

Derived from competent performance being a requirement if individuals are to obtain resources for survival and if social interaction and institutional functioning are to succeed. The motivational goal is personal success through demonstrating competence according to social standards. Associations: ambitious, influential, capable, successful, intelligent, self-respect.

Power

Derived from the functioning of social institutions apparently requiring some degree of status differentiation; to justify this fact of social life, and to motivate group members to accept it, groups must treat power as a value. May also be transformations of the individual needs for dominance and control. The motivational goal is attainment of social status and prestige, and control or dominance over people and resources. Associations: social power, wealth, authority, preserving my public image, social recognition.

Security

Derived from basic individual and group requirements. The motivational goal is safety, harmony, and stability of society, of relationships, and of self. Associations: national security, reciprocation of favors, family security, sense of belonging, social order, healthy, clean.

Conformity

Derived from the requirement that individuals inhibit inclinations that might be socially disruptive if interaction and group functioning are to run smoothly. The motivational goal is restraint of actions, inclinations, and impulses likely to upset or harm others and violate social expectations or norms. Associations: obedient, self-discipline, politeness, honoring of parents and elders.

Tradition

Derived from traditional modes of behaviour becoming symbols of the group’s solidarity, expressions of its unique worth, and presumed guarantors of its survival. The motivational goal is respect, commitment, and acceptance of the customs and ideas that one’s culture or religion imposes on the individual. Associations: respect for tradition, devout, accepting my portion in life, humble, moderate.

Benevolence

Derived from the need for positive interaction in order to promote the flourishing of groups and from the organismic need for affiliation. The motivational goal is preservation and enhancement of the welfare of people with whom one is in frequent personal contact. Associations: helpful, responsible, forgiving, honest, loyal, mature love, true friendship.

Universalism

Derived from those survival needs of groups and individuals that become apparent when people come into contact with those outside the extended primary group and become aware of the scarcity of natural resources. People may then realize that failure to accept others who are different and treat them justly will lead to life-threatening strife, and failure to protect the natural environment will lead to the destruction of the resources on which life depends. The motivational goal is understanding, appreciation, tolerance, and protection for the welfare of all people and for nature. Associations: equality, unity with nature, wisdom, a world of beauty, social justice, broad-minded, protecting the environment, a world at peace.
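
As a rough illustration of how a value dictionary can be used for measurement, the Python sketch below counts dictionary hits per value in a piece of text. The three mini-dictionaries are hypothetical and only borrow a few words from the goals and associations listed above; the project's actual dictionaries are described on their own pages and are likely much larger.

```python
import re
from collections import Counter

# Hypothetical mini-dictionaries, for illustration only.
VALUE_DICTIONARIES = {
    "security": {"security", "safety", "order", "healthy", "clean"},
    "universalism": {"equality", "nature", "justice", "environment", "peace"},
    "power": {"power", "wealth", "authority"},
}

TOKEN_RE = re.compile(r"[a-z']+")


def value_counts(text: str) -> Counter:
    """Count how many tokens in `text` match each value dictionary."""
    tokens = TOKEN_RE.findall(text.lower())
    counts = Counter()
    for value, words in VALUE_DICTIONARIES.items():
        hits = sum(1 for token in tokens if token in words)
        if hits:
            counts[value] = hits
    return counts


example_counts = value_counts("Clean energy protects nature and the environment")
```

Here `example_counts` records two universalism hits ("nature", "environment") and one security hit ("clean"). Comparing such counts across speakers or over time is one simple way to look at combinations of values and their differences.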

MODELING HUMAN VALUES

We use various modelling methods to label, classify and further visualize tweets.

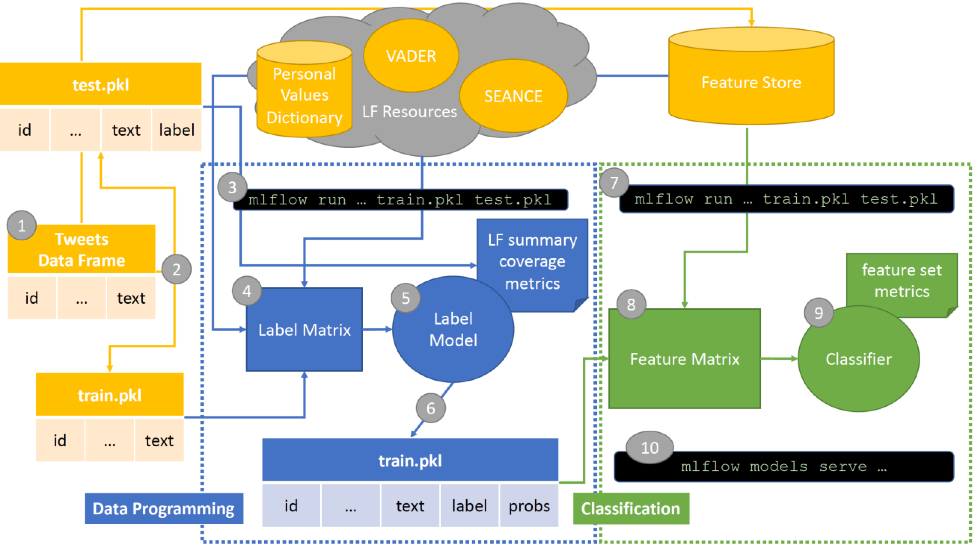

MODEL DEVELOPMENT

1. Sample some data. The data could be the scraped tweets related to energy in Canada and/or datasets that we have gathered, such as the Blog Authorship Corpus from Kaggle. The sampling method is determined by the classification task, such as universal personal values classification.

2. Split the sample into train and test sets. The test set is loaded into Label Studio (not pictured) for annotation by domain experts. The train set is sent to the data programming pipeline.

3. This command runs the data programming pipeline. It can be configured with the training parameters for the label model, whether a labeled test set from step 2 is available for calculating class balance and for further evaluating labeling function and label model accuracy, the train set created in step 2, and the classification task that the train data is for.

4. The labeling functions use the LF Resources to heuristically label the train data. Each labeling function votes for its respective label on every data point in the train data. The labeling functions form the columns of the label matrix and the data points form its rows.

5. This label matrix is the input to the label model, which models the correlations and conflicts among the labeling functions, de-noising the heuristic labels and approximating the true label.

6. The label model generates two new columns for the train set, label and probs. Label is the predicted true label for that data point, and probs is the probability distribution over all classes. The label model selects the class with the maximum probability in the multiclass case, and the classes above the knee of the probability distribution in the multilabel case. This train data is then evaluated for completeness, and all artifacts from the data programming pipeline, including the label model metrics and the labeling function summary, can be viewed in the MLflow UI.

7. Once the train data is determined to be of good quality, it is fed into the classification pipeline, which is run with this command. The pipeline can be configured to use different algorithms, such as multinomial logistic regression and multilayer perceptron, depending on the classification task and whether it is multilabel or multiclass.

8. The feature matrix is some combination of feature vectors generated by algorithms such as the Universal Sentence Encoder and TF-IDF, where the desired features are chosen at step 7. Feature matrices are created for the train set and the test set. The test set is ideally the human-annotated data, so that external validity can be measured. If manual annotation has not been completed, the train data can be split into train and test sets (and the test set can be further divided into test and validation sets if the classification algorithm needs them, for example for early stopping).

9. The train set feature matrix created in step 8 and the train labels or probs (depending on the algorithm) created in step 6 are used as input to the classification algorithm chosen at step 7. The end model produced by training is then validated using the test data. As with the data programming pipeline, all metrics and artifacts can be viewed in the MLflow UI. The classification steps 7-9 are repeated until a good quality model is obtained.

10. Once a good model for the classification task is obtained, it is served through a dedicated prediction API endpoint for that particular classification task. This endpoint is used to obtain predictions for all relevant data points.
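
The data programming steps above (labeling functions, label matrix, label and probs) can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the project's pipeline. The keyword sets stand in for the LF Resources, a normalized majority vote stands in for the label model (which, as described above, also models correlations and conflicts between labeling functions), and the "largest drop" rule is only one possible reading of "above the knee".

```python
from collections import Counter

ABSTAIN = -1
CLASSES = ["security", "universalism", "power"]  # hypothetical task


def make_keyword_lf(label, keywords):
    """Build a labeling function that votes `label` if any keyword appears."""
    def lf(text):
        tokens = set(text.lower().split())
        return label if tokens & keywords else ABSTAIN
    return lf


# Hypothetical LF Resources: small keyword sets, one labeling function each.
LABELING_FUNCTIONS = [
    make_keyword_lf(0, {"safety", "security"}),
    make_keyword_lf(0, {"stable", "stability"}),
    make_keyword_lf(1, {"environment", "nature"}),
    make_keyword_lf(2, {"wealth", "authority"}),
]


def build_label_matrix(texts, lfs):
    """Rows are data points, columns are labeling functions."""
    return [[lf(text) for lf in lfs] for text in texts]


def vote_probs(row, n_classes):
    """Normalized vote counts: a simple stand-in for the label model."""
    votes = Counter(v for v in row if v != ABSTAIN)
    total = sum(votes.values())
    if total == 0:
        # Every labeling function abstained: no information, so uniform.
        return [1.0 / n_classes] * n_classes
    return [votes[c] / total for c in range(n_classes)]


def multiclass_label(probs):
    """Multiclass case: the class with maximum probability."""
    return max(range(len(probs)), key=lambda c: probs[c])


def multilabel_labels(probs):
    """Multilabel case: classes before the largest drop in sorted probs."""
    order = sorted(range(len(probs)), key=lambda c: probs[c], reverse=True)
    drops = [probs[order[i]] - probs[order[i + 1]]
             for i in range(len(order) - 1)]
    if not drops or max(drops) == 0:
        return sorted(order)  # flat distribution: no knee to cut at
    knee = drops.index(max(drops))
    return sorted(order[: knee + 1])


texts = [
    "pipeline safety keeps supply stable",
    "protect nature and national security",
]
matrix = build_label_matrix(texts, LABELING_FUNCTIONS)
probs = [vote_probs(row, len(CLASSES)) for row in matrix]
```

For the second text, one labeling function votes security and another votes universalism, so the probabilities tie at 0.5 each. The multiclass rule picks a single class, while the knee rule keeps both, which is the behaviour wanted for a multilabel task.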

Using this model creation process, we can quickly include new data types such as images, test new LF Resources, add or modify classification tasks, and experiment with features to feed into the model, all without being constrained by available labelled data or existing models. This process is particularly useful for a multimodal approach to studying a variety of communication concepts where the data is constantly evolving.
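
To make the feature matrix idea concrete, the sketch below builds a small TF-IDF matrix using only the Python standard library. It is an illustration of the general technique, with whitespace tokenization and an unsmoothed `log(N / df) + 1` weighting chosen as assumptions; a real pipeline would more likely use a library implementation and may weight terms differently.

```python
import math
from collections import Counter


def tfidf_matrix(docs):
    """Return (vocabulary, matrix) with one row per document."""
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({token for doc in tokenized for token in doc})
    n_docs = len(docs)

    # Document frequency: in how many documents each token appears.
    df = Counter(token for doc in tokenized for token in set(doc))
    idf = {token: math.log(n_docs / df[token]) + 1.0 for token in vocab}

    matrix = []
    for doc in tokenized:
        tf = Counter(doc)
        # Term frequency is the share of the document taken by the token.
        row = [(tf[t] / len(doc)) * idf[t] if doc else 0.0 for t in vocab]
        matrix.append(row)
    return vocab, matrix


vocab, features = tfidf_matrix(["wind power", "wind farm"])
```

Because "wind" appears in both documents, it gets the lowest weight, while "power" and "farm" are weighted up as more distinctive. Rows like these could be concatenated with sentence-embedding vectors to form the combined feature matrix described above.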